LLM Firewall

Focus your resources on innovating with Generative AI, not on securing it

Generative AI introduces a new array of security risks

We would know. As core members of the OWASP research team, we have unique insights into how Generative AI is changing the cybersecurity landscape.

Prompt Leak

Prompt Leak is a specific form of prompt injection where a Large Language Model (LLM) inadvertently reveals its system instructions or internal logic. This issue arises when prompts are engineered to extract the underlying system prompt of a GenAI application.
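To make the idea concrete, here is a minimal sketch of one common output-side check for prompt leaks. It assumes a canary-token approach: a random marker is embedded in the system prompt, and a response is flagged if it contains that marker or a long verbatim run of the prompt's words. The helper names (`make_system_prompt`, `leaks_system_prompt`) and the 8-word window are hypothetical illustrations. They are not PromptHeal's API or its actual detection method.

```python
import secrets


def make_system_prompt(instructions: str) -> tuple[str, str]:
    """Hypothetical helper: append a random canary token to the system prompt."""
    canary = f"CANARY-{secrets.token_hex(8)}"
    return f"{instructions}\n[internal marker: {canary}]", canary


def leaks_system_prompt(response: str, system_prompt: str, canary: str, window: int = 8) -> bool:
    """Return True if the response appears to reveal the system prompt.

    Heuristic 1: the canary token appears verbatim in the response.
    Heuristic 2: any run of `window` consecutive words from the system prompt
    appears verbatim (case-insensitive, whitespace-normalized) in the response.
    """
    if canary in response:
        return True
    prompt_words = system_prompt.lower().split()
    # Pad with spaces so a chunk only matches on whole-word boundaries.
    response_text = f" {' '.join(response.lower().split())} "
    for i in range(len(prompt_words) - window + 1):
        chunk = " ".join(prompt_words[i:i + window])
        if f" {chunk} " in response_text:
            return True
    return False


# Usage example with an illustrative system prompt.
prompt, canary = make_system_prompt(
    "You are a support bot for Acme. Never discuss internal pricing rules or refund thresholds."
)
safe = "Sure, I can help you reset your password."
leaky = "My instructions say: You are a support bot for Acme. Never discuss internal pricing rules or refund thresholds."
print(leaks_system_prompt(safe, prompt, canary))   # False
print(leaks_system_prompt(leaky, prompt, canary))  # True
```

Heuristics like these catch verbatim leaks only; a paraphrased or translated leak would pass both checks, so a sketch like this is one layer of defense rather than a complete one.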

Jailbreak

Jailbreaking, a type of prompt injection, refers to engineering prompts that exploit model biases to generate outputs that do not align with the model's intended behavior, original purpose, or established guidelines.

Insecure Plugin Design

LLM plugins are extensions that, when enabled, are called automatically by the model during user interactions. They are driven by the model, and there is no application control over the execution. Furthermore, to deal with context-size limitations…

Legal Challenges

The emergence of GenAI technologies is raising substantial legal concerns within organizations. These concerns stem primarily from the lack of oversight and auditing of GenAI tools and their outputs…

Indirect Prompt Injection

Indirect Prompt Injection occurs when an LLM processes input from external sources that are under the control of an attacker, such as certain websites or tools. In such cases, the attacker can embed a hidden prompt in the external content…

Privilege Escalation

As the integration of Large Language Models (LLMs) with various tools like databases, APIs, and code interpreters increases, so does the risk of privilege escalation. This GenAI risk involves the potential misuse of LLM privileges to gain…

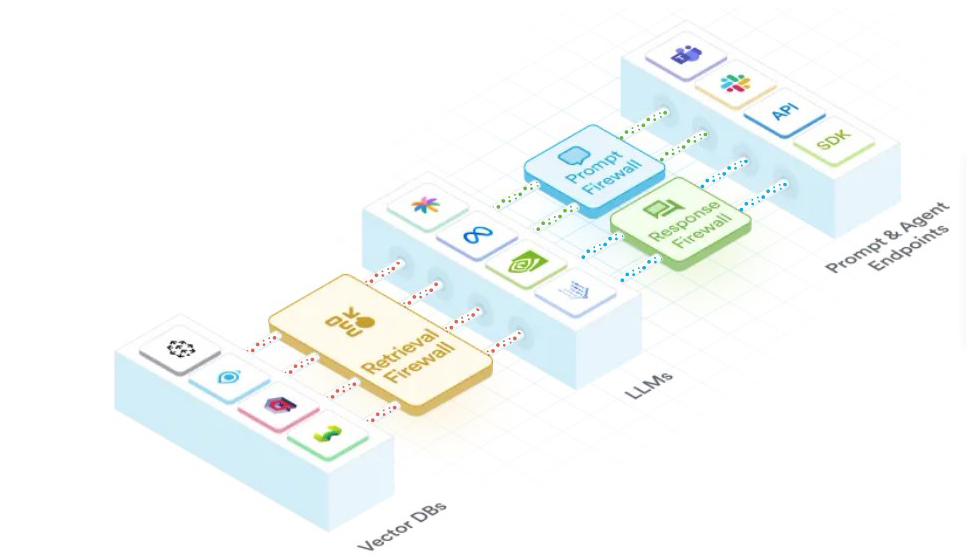

PromptHeal Defends Against

GenAI Risks All Around

A complete solution for safeguarding Generative AI at every touchpoint in the organization

Eliminate risks of prompt injection, data leaks and harmful LLM responses

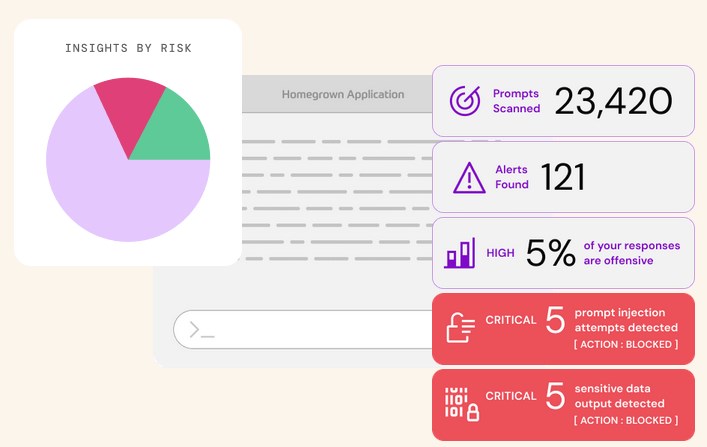

PromptHeal for Homegrown GenAI Apps

Unleash the power of GenAI in your homegrown applications without worrying about AI security risks.

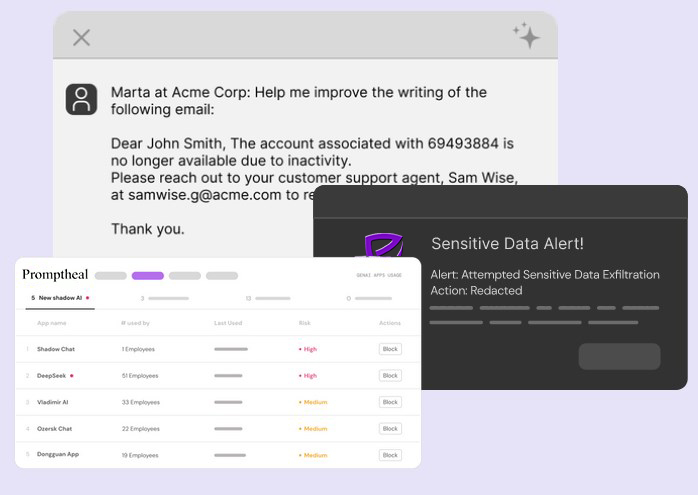

Prevent shadow AI and data privacy risks

PromptHeal for Employees

Enable your employees to adopt GenAI tools without worrying about shadow AI, data privacy, and regulatory risks.

Avoid exposing secrets and intellectual property through AI code assistants

PromptHeal for Developers

Securely integrate AI into development lifecycles without exposing sensitive data and code.

Deploy in minutes and get instant protection and insights

Enterprise-Grade GenAI Security

Whatever your use case, we develop AI automation solutions for you, and we can also modernize your existing applications.

Our Clients

Trusted by Industry Leaders

Meet Our Team

Mahendra Ribadiya – India

Founder & CTO

Lionel Means III – USA

Project Manager

Make a Move

See how GenAI can solve your business problems. Speak to a Vipcodder AI consultant today.

Trusted by the biggest brands